While it was believed that complex computations on Bitcoin were impossible only last year, the announcement of the BitVM project has come to challenge that.

The recently launched BitVMX whitepaper marks a significant milestone in the journey of having complex computations on Bitcoin, promising not only to overcome the limitations of its predecessor but also to redefine the possibilities of smart contract execution within the Bitcoin ecosystem.

In this article, we deep dive into the differences between BitVMX and BitVM, including the computational paradigm, gates, CPU, and data availability.

Disputable Computation Paradigm

BitVM was the first attempt to bring the Disputable Computation paradigm to Bitcoin, it was conceived in October 2023 by Robin Linus.

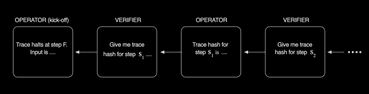

Under this paradigm, if all involved parties agree on the computation result, no computation is performed on-chain. However, in case any party disagrees, an on-chain interaction begins to formally and efficiently solve the dispute: in the worst case, only a single disputed gate, wire, or step of the computation is verified on-chain.

The Disputable Computation paradigm was first envisioned by the TrueBit project back in 2017 to be used for arbitration and later applied to the creation of blockchain bridges. Due to its average low computing cost, the paradigm was chosen by Optimistic Rollups to build 2nd layers on altchains.

The closest protocol to BitVMX is BitVM. However, BitVM does not refer to a single concept, but four:

- The BitVM Gate-based protocol (a.k.a. tapleaf circuits or “BitVM0”), that is partially defined in the BitVM whitepaper.

- The BitVM CPU design that was informally presented by Robin Linus in several and interviews (currently called BitVM1).

- The partially implemented BitVM CPU, now deprecated, also authored by Robin Linus.

- The BitVM2 single-challenge protocol, is partially defined here.

We’ll refer to the CPU when disambiguation is required. Since there is no full formal description of this BitVM CPU, we can only make our comparisons against the straightforward design we reconstruct from Linus’s interviews.

BitVMX vs BitVM (gate-based)

The BitVM gate-based protocol (BitVM0) is considered only of theoretical interest, it’s impractical for all interesting use cases, such as SNARK verification of running a sidechain light client.

In contrast, BitVM CPU (a.k.a. BitVM1) and BitVMX are fully practical.

BitVMX vs BitVM CPU

The BitVMX CPU is similar to the BitVM CPU in that it searches through the trace of the program execution.

The “circuit” of BitVM is replaced by the trace of all instructions executed, where all loops are unrolled. However, the CPU protocols differ in how information is exchanged, and instructions and memory hashed.

In contrast with the BitVM CPU, BitVMX does not require the creation of a Merkle tree of instructions, a Merkle tree of memory words, or even the use of the taproot tree!

This makes BitVMX far more efficient to set up and evaluate.

Memory and Speed

BitVMX prover and verifiers build only a sequential hash chain that includes the outputs produced at each step plus each previous step hash. By using memory-mapped registers, the output of any instruction consists of two words written to memory, one of them being the program counter. This information is enough to perform an n-ary search over the trace.

To avoid the need for memory merkelization, BitVMX uses a new challenge/response protocol to detect memory access faults based on tracking the last time each memory cell was written. Combined with shorter hash inputs, BitVMX’s linear hashing method enables the use of arbitrary amounts of memory, and runs the virtual processor locally more than two times faster than the BitVM CPU.

BitVMX construction is flexible enough to support the implementation of most of the existing CPUs, ranging from 8-bit to 64-bit cores.

For example, it’s easy and efficient to implement a MIPS core, or even a 64-bit RISC-V processor and run a Linux application on top of BitVMX. In contrast, BitVM CPU is designed for a single CPU type that is non-standard.

RISC-V programs require translation to be run in the BitVM CPU. While BitVMX is flexible to run both RISC and CISC cores, RISC processors result in a simpler implementation.

CISC processors may require longer scripts, increasing the challenge/response cost. Implementing a CISC processor on BitVMX would probably require the prover to show intermediate results for microinstructions, and the verifier to challenge microinstructions instead of full instructions.

Trace Search

BitVM CPU is designed to perform a binary search on the trace. This is an inherent limitation of BitVM CPU, as adding additional branches in the tree increases the complexity of the design. The BLAKE3 hash function implemented in the BitVM CPU only hashes two 32-byte elements into one digest (a 2:1 compression).

Adding more branches at each level of the tree requires some tricks. You can stack this 2:1 compression function multiple times (but you need to allow the verifier to challenge the intermediate hashes), or you can expand the size of the hash function input message. This last option is prohibitive due to the consequential expansion of the Bitcoin script.

Currently, BLAKE3 2:1 compression consumes 100 Kbytes of script. A 4:1 compression would reach the standard transaction limit.

An additional trick (also used by BitVM2) is to split BLAKE3 computation into sub-functions and let the prover provide signed midstates for the verifier to choose which sub-function to challenge. This trick could reduce the script size 50%. However, all these tricks would make the BitVM CPU much more complex for limited benefits.

On the contrary, BitVMX allows a smooth tradeoff between worse-case round complexity and the amount of data included in transactions by allowing the free selection of the branch factor in the trace search. For example, using a binary search over a 32-bit space trace BitVMX requires 70 transactions in the worst case, while BitVM requires 40. But using a 4-way search, we can reduce it to 36, outperforming BitVM CPU.

Remarkably, we found an optimization to BitVMX to reduce the number of transactions to 20 using a 16-way search (4 bits per query) without increasing the transaction payload assuming both prover and verifier act rationally during the dispute! This means that under approximately equally-sized payloads, the BitVMX worst-case challenge period would be half of BitVM’s period. By increasing the number of hashes revealed in each iteration of the search we can reduce the number of rounds of the search. The implementors of BitVMX should choose thor sweep spot between the challenge period and transaction cost.

BitVMX vs BitVM2

While the BitVM CPU attempts to run any user-defined program, BitVM2 focuses on running only a SNARK verifier, which, in turn, is capable of verifying the correct execution of longer programs.

Instead of compiling the program directly to BitVM CPU or BitVMX, the BitVM2 protocol requires creating a SNARK circuit to prove the execution of the program (setup), then proving the program execution for a particular input. Advanced ZK virtual machines such as ZKVM can alleviate the task of writing the circuit, by leveraging on compilers that accept procedural languages. Nevertheless, these rely on STARK provers, so the SNARK verifier must verify the execution of a STARK verifier which in turn verifies the execution of the program.

This technique has both benefits and disadvantages. For complex programs, such as sidechain withdrawal verifications, there are several benefits of running a SNARK verifier. If the input is long, and there is no need to make the input publicly available, then the SNARK circuit can accept the input as a private witness.

BitVMX requires all inputs to be public and signed (data availability of the input can be proven instead, but it’s costly and also requires the use of SNARKs).

The second benefit is program size: with recursive SNARKs, the verifier trace is constant size and does not depend on the length of the computation. The drawback of using SNARKs or SNARKs+STARKS is the complexity (amount of code involved) and attack surface. All the compilers, provers, and verifiers may contain bugs. Therefore executing a SNARK is riskier.

Since BitVMX can also run a SNARK verifier, for complex programs the questions comes down to which SNARK verifier (BitVMX or BitVM2) is better in terms of round complexity and transaction cost. With the information available today on BitVM2, it seems that BitVM2 requires fewer on-chain rounds, but parties must pay a higher transaction cost.

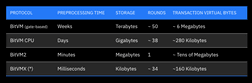

Using the limited data available regarding BitVM, we extrapolate it to show a comparison of each protocol executing a dispute over a SNARK verification, assuming it requires 1 billion steps. Round and transaction costs represent worse cases. Preprocessing time is based on a single-thread process running on a standard laptop.

(*) For the comparison, we use a BitVMX protocol using a 4-way search, and a hashing function split into 8 challengeable sub-functions.

Stay updated

To stay updated about the research and innovations regarding BitVMX, make sure to join the official BitVMX Builders telegram group to follow and contribute to the project’s progress.

Get involved in making Bitcoin work for everyone!